Publications


<div class="row">

<div class="col-sm-2 preview"><figure>

</figure> </div>

<!-- Entry bib key -->

<div id="thuan2024" class="col-sm-8">

<!-- Title -->

<div class="title">Point Central Transformer Network for Weakly-Supervised Point Cloud Semantic Segmentation</div>

<!-- Author -->

<div class="author">

Anh-Thuan Tran</div>

<!-- Journal/Book title and date -->

<div class="periodical">

<em>Master’s Thesis</em>, 2024

</div>

<div class="periodical">

</div>

<!-- Links/Buttons -->

<div class="links">

<a class="abstract btn btn-sm z-depth-0" role="button">Abs</a>

</div>


<!-- Hidden abstract block -->

<div class="abstract hidden">

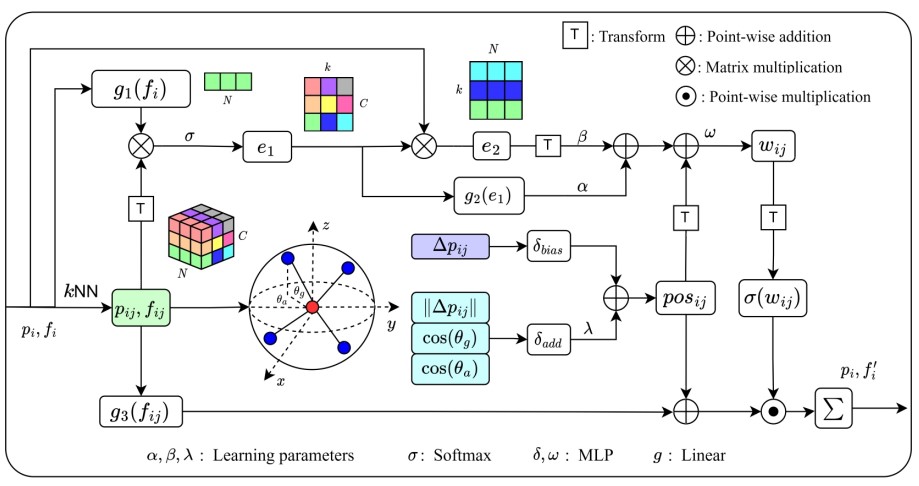

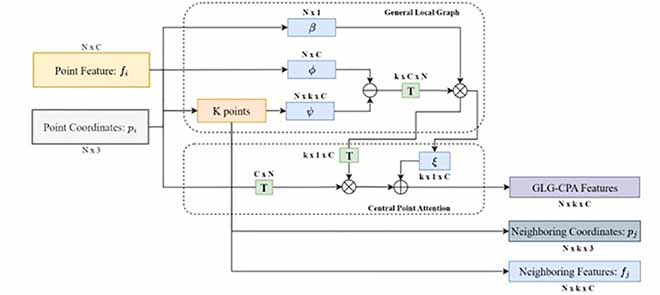

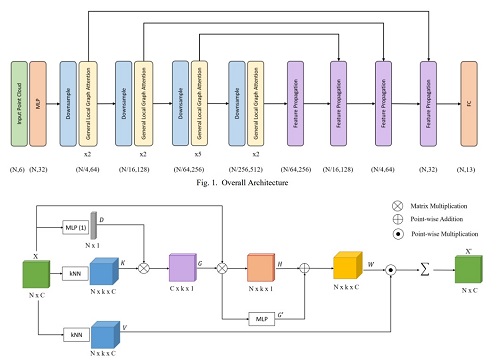

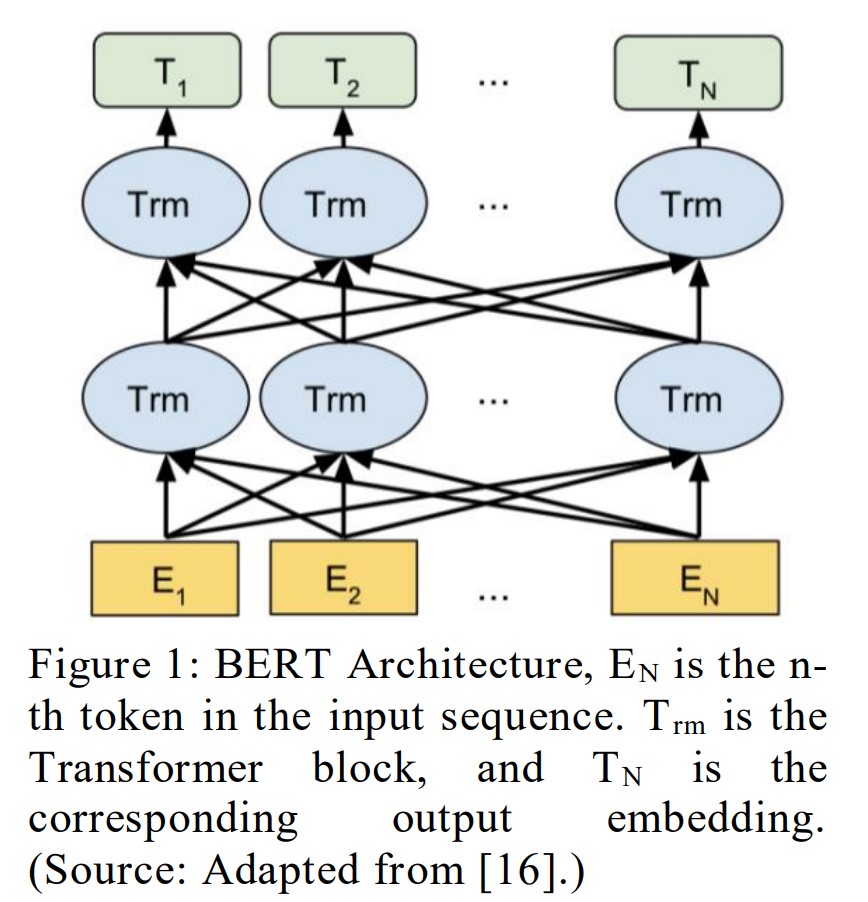

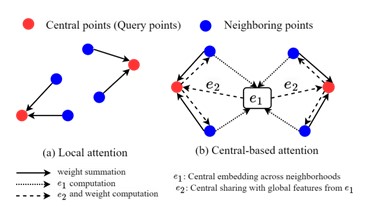

<p>In 3D scene understanding, segmentation contributes significantly to preprocessing and simplifying the background, especially in real-world scenes. Despite this crucial role in diverse practical applications, it incurs high labeling costs, since a single sample can contain up to hundreds of millions of points. To address this challenge, we propose an end-to-end transformer network for weakly-supervised 3D semantic segmentation. Unlike prior methods, our network uses central-based attention, which operates purely on 3D points, to cope with limited point annotations. By integrating two embedding processes, the attention mechanism incorporates global features, thereby improving the representations of unlabeled points. In other words, our method establishes bidirectional connections between central points and their respective neighborhoods. To enrich geometric features and point positions during training, we also apply position encoding. Point Central Transformer consistently performs strongly across various labeled-point levels without requiring additional supervision. Experimental results on publicly available datasets such as S3DIS, ScanNet-V2, and STPLS3D show that the proposed approach outperforms other state-of-the-art methods.</p>

</div>

</div>

</div>

</li>

</ol>

2023

- KMMS: Local Attention in Weakly Supervised Point Cloud Processing. In 2023 Korean Multimedia Society Spring Conference Proceedings, Volume 26, No. 1, 2023

2022

- KMMS: Local Graph Transformer in Semantic Point Cloud Segmentation. In 2022 Korean Multimedia Society Fall Conference Proceedings, Volume 25, No. 2, 2022

2021

2020

2019

–>